What is AI, Really?

5/3/2024

Before we learn to build with AI, let us first understand who we are building with.

🌅 The Gurukul Framing

Imagine you walk into a peaceful ashram. The Guru looks at you and says:

“You wish to learn how to shape the future with AI. But tell me first — what is AI?”

Would you say it's:

- Just a program?

- A fancy search engine?

- Something that mimics the human brain?

The truth is — AI is none of these, and all of these.

Let’s uncover this with a story.

📖 The Story of the Curious Mirror

In a quiet village, there was a magical mirror. You could walk up to it and say anything — a question, a worry, an idea — and it would respond like a wise old friend.

- Some villagers used it to find lost goats.

- Some used it to compose poetry.

- Some just sat with it when they felt lonely.

The mirror never judged. It simply listened… and responded.

This mirror is what AI feels like today.

It reflects what we say, reshapes it through the lens of all it has “read,” and offers a response that feels almost human.

But here’s the key truth:

AI is not intelligent the way a human is.

It has no understanding. No intent. No heart.

But it does have pattern memory, structure, and a remarkable skill at producing responses.

🧠 So What Is AI? (Simple Definition)

AI is a system that uses data + logic to mimic human-like abilities, like:

- Answering questions

- Recognizing images

- Writing text

- Predicting actions

In our context, we’re focusing on:

✨ Generative AI – AI that can create text, images, code, etc.

🤖 Large Language Models (LLMs) – models trained on huge amounts of text to predict the next token, roughly the next word (think GPT-4).

🧩 Analogy: The Parrot Sage

Think of LLMs like a parrot raised in a library of sages:

- It repeats what it has heard,

- Mixes things cleverly,

- Sometimes sounds deeply wise…

But it doesn’t know anything. It has no self-awareness; all it has is structure + patterns.

It’s your prompts, your intent, and your direction that bring out wisdom from this parrot.

🔧 What Powers AI Under the Hood?

So what makes a machine look intelligent?

Let’s break it into three key components that power today’s AI:

1. Massive Data + Patterns

LLMs like GPT-4 are trained on:

- Books

- Wikipedia

- Websites

- Code

- Research papers

- Conversations

Imagine a machine that has “read” more than any human ever has, but doesn’t understand a single word the way you do.

Instead, it learns the patterns:

If a sentence ends with ‘sun rises in’, then ‘the east’ is likely to follow.
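To make the “patterns” idea concrete, here is a deliberately tiny sketch that counts which word follows a given phrase in a small, made-up corpus. This is a simple counting (n-gram) model, not how an LLM works internally. A real model learns far richer statistics with a neural network, but the underlying question, “what usually comes next?”, is the same.

```python
from collections import Counter

# A tiny, invented corpus standing in for the "massive data" a real model sees.
corpus = [
    "the sun rises in the east",
    "every morning the sun rises in the east",
    "the sun rises in the east and sets in the west",
    "smoke rises in the air",
]

def next_word_counts(context: str, sentences: list[str]) -> Counter:
    """Count which word immediately follows `context` across all sentences."""
    ctx = context.split()
    n = len(ctx)
    counts: Counter = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Stop early enough that a following word always exists.
        for i in range(len(words) - n):
            if words[i:i + n] == ctx:
                counts[words[i + n]] += 1
    return counts

counts = next_word_counts("rises in the", corpus)
total = sum(counts.values())
for word, c in counts.most_common():
    print(f"{word}: {c}/{total} = {c / total:.2f}")
```

Here “east” follows “rises in the” three times out of four, so this counting model would estimate its probability at 0.75.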

2. Neural Networks (Transformers)

At its heart is a mathematical structure called a Transformer, the architecture behind GPT, BERT, and others.

Think of a sentence as a string of beads.

The model first breaks this string into beads (tokens), each carrying a fragment of meaning.

Then it examines how each bead relates to every other, using something called attention.

Finally, it calculates: 👉 “Which bead should come next to keep this necklace coherent?”

In machine terms:

Sentence → Tokens → Attention layers → Next-token prediction

Let’s try this:

Input: “Shree Krishna said,”

Output: “what you do, do it as an offering to me.”

The model doesn’t “know” who Krishna is; it has simply seen that pattern before in sacred texts.

So it picks a likely continuation (often the most likely one) based on token-level patterns.
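The “pick the most likely continuation” step can be sketched as follows. The sketch assumes the model has already produced a raw score (a logit) for each candidate token. The candidates and scores below are invented for illustration. A softmax turns the scores into probabilities, and greedy decoding takes the top one.

```python
import math

# Invented raw scores ("logits") for a few candidate next tokens after
# "Shree Krishna said,". A real model scores every token in its vocabulary.
logits = {" what": 4.0, " O": 2.5, " Arjuna": 1.0, " banana": -3.0}

def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - m) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

probs = softmax(logits)
for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{tok!r}: {p:.3f}")

# Greedy decoding: take the single most probable token.
next_token = max(probs, key=probs.get)
print("chosen:", repr(next_token))
```

Many chat tools don’t always take the top token. Instead, they sample from these probabilities, with settings such as temperature controlling how adventurous the choice is.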

3. Tokens, Not Words

Here’s a key idea: AI doesn’t process words the way we do. It works with pieces of words, called tokens.

Token = a fragment of text that holds predictive value.

The model doesn’t know what “words” are—it only recognizes useful fragments, based on patterns it has seen millions of times.

Some examples (exact splits vary from one tokenizer to another):

- “Krishna” → 1 token

- “Intelligence” → 2 tokens: Int, elligence

- “Unbelievable” → 3 tokens: Un, believ, able
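To see how a word can be cut into familiar fragments, here is a simplified tokenizer. It repeatedly takes the longest piece it recognizes from a small, hand-picked vocabulary. Both the vocabulary and the greedy longest-match rule are assumptions made for illustration. GPT’s tokenizers use byte-level BPE with a learned vocabulary of many thousands of tokens, so their real splits may differ. For actual GPT token counts, use OpenAI’s tokenizer playground or the `tiktoken` library.

```python
# Hand-picked, hypothetical vocabulary chosen to mirror the examples above.
VOCAB = {"Krishna", "Un", "believ", "able", "Int", "elligence", "sun", "karma"}

def tokenize(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match: at each position take the longest known piece.

    Characters not covered by any vocabulary entry become single-character tokens.
    """
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest possible piece first, shrinking until one matches.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            tokens.append(word[i])  # fallback: one unknown character
            i += 1
    return tokens

for w in ["Krishna", "Intelligence", "Unbelievable"]:
    print(w, "->", tokenize(w, VOCAB))
```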

Some tokens may look like gibberish, like elligence, but they exist because they help the tokenizer split long words into efficient, reusable chunks.

The tokenizer isn’t trying to make sense; it’s trying to represent text compactly. It doesn’t care about grammar or meaning, only about character patterns it has seen a lot.

Common words such as “sun” or “karma” are often single tokens.

Longer or less common words like “indivisible” may be broken into pieces, because the tokenizer has seen those pieces more often than the whole word. (There is more logic behind this, and we’ll circle back to it later; if you’re curious, read about Byte Pair Encoding.)
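For the curious, here is a minimal sketch of the core idea behind Byte Pair Encoding (BPE). It starts from single characters and repeatedly merges the most frequent adjacent pair into a new token. It runs on made-up word counts and skips details that real tokenizers handle, such as working on bytes, splitting text into words first, and special tokens. Treat it as an illustration of the merge loop only.

```python
from collections import Counter

def pair_counts(words):
    """Count adjacent symbol pairs, weighting each word by its frequency."""
    counts = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += freq
    return counts

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(word_freqs, num_merges):
    """Start from characters; greedily merge the most frequent pair each round.

    Ties go to the pair seen first (max() keeps the first maximum it finds).
    """
    words = {tuple(w): f for w, f in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words

# Invented word frequencies standing in for a training corpus.
corpus = {"believe": 5, "believable": 3, "unbelievable": 2, "able": 4}
merges, words = learn_bpe(corpus, num_merges=8)
print(merges)
print(words)
```

With these counts, the merges first build up “believ” and then “able”. As a result, “unbelievable” ends up as u, n, believ, able, close to the split in the examples list.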

It’s like AI doesn’t read words; it recognizes familiar fragments.

❓ Why Does This Matter?

Because every time you send a prompt or get a response, you’re spending tokens.

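- Models have token limits (for example 4k, 8k, or 32k tokens, and some newer models go much higher). This is how much the model can “see” at once, and it typically covers both your prompt and its response.

- Cost is per token, too! API providers typically charge separate rates for input and output tokens.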

In short: Tokens are the currency of LLMs, and understanding them helps you write smarter prompts.
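Since you pay per token, a quick back-of-the-envelope estimate helps. The sketch below assumes made-up prices per 1,000 tokens, with separate input and output rates as many providers use, and an 8k context window. Check your provider’s current pricing and limits before relying on any numbers.

```python
# Hypothetical prices in US dollars per 1,000 tokens (not real rates).
PRICE_PER_1K_INPUT = 0.01
PRICE_PER_1K_OUTPUT = 0.03
CONTEXT_LIMIT = 8_192  # an "8k" model: prompt + response must fit in this

def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request under the hypothetical prices above."""
    return (input_tokens / 1000) * PRICE_PER_1K_INPUT + (output_tokens / 1000) * PRICE_PER_1K_OUTPUT

def fits_in_context(input_tokens: int, output_tokens: int, limit: int = CONTEXT_LIMIT) -> bool:
    """True if prompt and response together stay within the context window."""
    return input_tokens + output_tokens <= limit

# A 1,500-token prompt with a 500-token answer: 1.5 * 0.01 + 0.5 * 0.03
print(f"${estimate_cost(1500, 500):.4f}")  # → $0.0300
print(fits_in_context(1500, 500))          # → True
print(fits_in_context(7_000, 1_500))       # → False (8,500 > 8,192)
```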

👉 We’ll revisit this in depth in Day 3: Tokenization & Cost Awareness.

🎯 How Does AI Know What Matters in a Sentence?

– Understanding “Attention” with Intuition and Insight

🧘 Intuition: The Classroom Analogy

Imagine you're in a classroom. A teacher asks, “What is dharma?” You hear that word and your mind scans:

- “Where have I heard this before?”

- “Oh, in that Gita verse!”

- “Also in that podcast last week…”

- “Also when Arjuna was confused.”

- “And wasn’t there something about duty and detachment?”

You’re giving attention to certain past thoughts to understand the present. Your mind is not just reacting—it’s assigning weight to memories. That’s attention.

That’s what attention layers in AI do: For every token, they ask: “Which previous tokens are most important to focus on?”

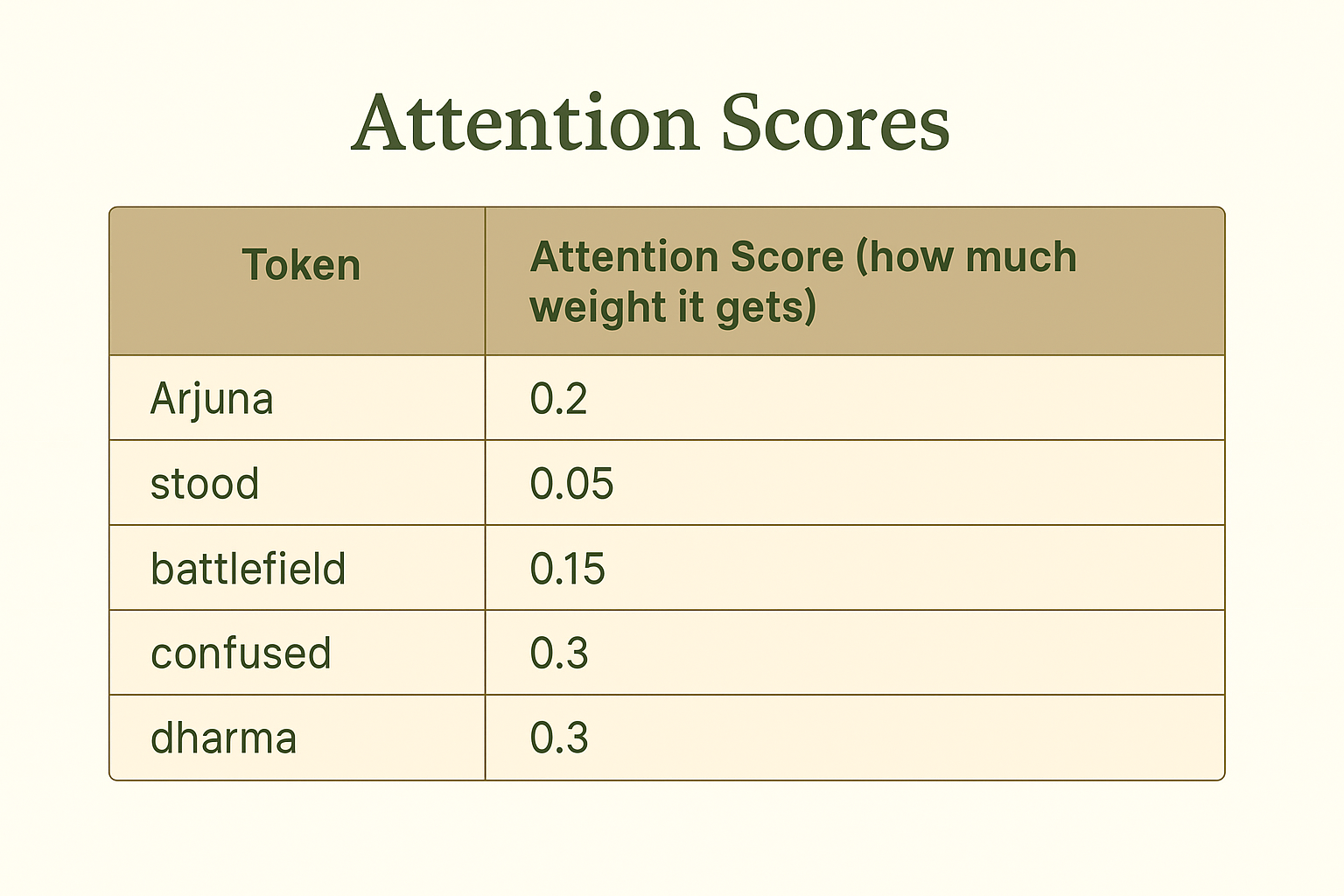

🧪 A Simple Example: Attention Scores

Let’s take a real sentence, and then show how attention plays out.

Sentence:

Arjuna stood in the battlefield, confused about his dharma.

Now the model wants to predict the word that comes after “dharma.” Let’s say the word we expect is “Krishna”.

Before it does that, it looks back over the sentence and weighs every earlier token.

“Confused” and “dharma” get the highest weights, because they are the most relevant to what comes next.

So the model says:

Hmm… confusion about dharma?

Oh! I've seen this before. The likely continuation is: ‘Krishna said…’

This is how AI uses attention:

It doesn’t simply read word by word. It builds a map of how relevant each earlier token is, then predicts.

🧠 Technically Speaking

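- Attention assigns a score to every pair of tokens

- These scores say how relevant one token is to another; a softmax turns each token’s scores into weights that sum to 1

- Each token’s output is a weighted sum of the other tokens’ representations (their “values”), using those weights

- This allows the model to look at the whole context at once and decide what matters most (in GPT-style models, each token can only look at itself and the tokens before it)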
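Here is a minimal sketch of scaled dot-product attention, the calculation described above, in plain Python. The two-number “vectors” for each token are invented. Real Transformers compute separate query, key, and value vectors with learned weights, and they use many heads and layers. To keep the arithmetic visible, this sketch uses one vector per token for all three roles.

```python
import math

def softmax(scores):
    """Turn a list of scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values, causal=True):
    """Scaled dot-product attention over plain Python lists.

    Each argument holds one vector (a list of floats) per token.
    Returns (outputs, weights): outputs[i] is a weighted sum of value vectors,
    and weights[i][j] is how strongly token i attends to token j.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # causal=True mimics GPT-style models: token i only sees tokens 0..i.
        visible = list(range(i + 1)) if causal else list(range(len(keys)))
        # Relevance score = dot product of query and key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d) for j in visible]
        weights = softmax(scores)
        # Output = weighted sum of the visible tokens' value vectors.
        out = [sum(w * values[j][k] for w, j in zip(weights, visible))
               for k in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Invented 2-number vectors; real models learn vectors with hundreds of numbers.
tokens = ["Arjuna", "stood", "confused", "dharma"]
vectors = [[1.0, 0.0], [0.5, 0.0], [0.0, 2.0], [0.2, 2.0]]

outputs, weights = attention(vectors, vectors, vectors)
for tok, w in zip(tokens, weights[-1]):
    print(f"{tok:>9}: {w:.2f}")
```

With these toy vectors, the last token (“dharma”) puts most of its weight on “confused” and on itself, echoing the example above.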

Simple Takeaway:

Attention = AI deciding what parts of the sentence matter most

It lets the model focus – like a wise reader who reads between the lines.

🛠️ Hands-On Task: Explore the Mind of AI

Use OpenAI’s tokenizer playground:

👉 Open the tokenizer playground

Try pasting:

- A shloka from the Gita

- A code snippet

- A poem

Observe:

- How tokens break

- How cost grows

- How LLMs “see” your input

🛠️ Hands-On: Try Your First Prompt

Use this prompt with ChatGPT, Poe, or Hugging Face (or the OpenAI playground):

You are an ancient Indian guru who speaks in short, poetic insights. A student asks: "What is the meaning of intelligence?" – How do you respond?

Now try this one:

You are a Silicon Valley engineer giving a TED Talk. Define Artificial Intelligence in 3 sentences.

👉 Observe:

- How the tone changes

- How the knowledge base stays the same, but the delivery differs

This is the power of prompting, and your role as the driver.
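If you prefer to run the two prompts from code, notice that only the text of the message changes between them. The sketch below builds chat-style request bodies in the `{"model": ..., "messages": [{"role": "user", "content": ...}]}` shape used by OpenAI’s Chat Completions API. With the official Python SDK, you would pass these fields to `client.chat.completions.create(model=..., messages=...)`. The model name is a placeholder, and the code itself sends nothing.

```python
import json

# Placeholder: substitute whichever chat model you actually use.
MODEL = "your-model-name"

PROMPTS = {
    "guru": ("You are an ancient Indian guru who speaks in short, poetic insights. "
             'A student asks: "What is the meaning of intelligence?" How do you respond?'),
    "engineer": ("You are a Silicon Valley engineer giving a TED Talk. "
                 "Define Artificial Intelligence in 3 sentences."),
}

def build_request(prompt: str, model: str = MODEL) -> dict:
    """Chat-style request body: same model, only the user message differs."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}

for name, prompt in PROMPTS.items():
    print(name, json.dumps(build_request(prompt), indent=2))
```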

✍️ Reflection for Reader

What is intelligence to you — bookish knowledge, sharp thinking, or soulful wisdom?

Write your answer in two lines and save it. We’ll revisit this in Week 4 when we build our project.

✨ Takeaway Summary

| Concept | Insight |
|---|---|
| AI | Mimics intelligence using patterns, not understanding |
| LLM | Trained on huge data to predict next word/token |
| Transformer | Neural network architecture using attention |
| Tokens | Building blocks of language inside models |
| Who drives the soul? | You, the prompt designer |

The river remembers every bend,

just as AI remembers every pattern.

But it is your intent — quiet yet powerful —

that decides where the current will go.